import os

import warnings

import time

os.environ['USE_PYGEOS'] = '0' # Force use of shapely>2.0

# Data containers

import geopandas as gpd

import numpy as np

import pandas as pd

import xarray as xr

# Plotting

import hvplot.pandas

import hvplot.dask

import holoviews as hv

from holoviews.element.tiles import EsriTerrain

# GDPTools

from gdptools import WeightGen

from gdptools import AggGen

from gdptools import UserCatData

warnings.filterwarnings('ignore')

hv.extension('bokeh')Use Cases

poly-to-poly-interpolation

Keywords: gdptools; spatial-interpolation; polygon-to-polygon interpolation;

Domain: Hydrology

Language: Python

Description:

This notebook demonstrates the generation and aggregation from one polygon source to another polygon target. The example here demonstrates mapping USGS National Hydrologic Model output to CONUS HUC12s. Calculation of area weighted statistics as shown here is useful process in many water budget or landscape analysis applications.

Polygon-to-polygon weight generation and aggregation.

This notebook demonstrates the generation and aggregation from one polygon source to another polygon target. The example here demonstrates mapping USGS National Hydrologic Model output to CONUS HUC12s. Calculation of area weighted statistics as shown here is useful process in many water budget or landscape analysis applications.

Why

In the use-case presented here we are interpolating hydrologic modeling results of soil moisture, calculated on a unique spatial fabric of polygons representing Hydrologic Response Units (HRU) which are often represented as polygons splitting a catchment on either side of the stream network of that catchment to a more common fabric such as 12 digit hydrologic unit codes (HUC12). It could be other polygons for example representing states or counties. To interpolate the values, a weights table is calculated which describes the fraction of each contributing HRU to the HUC12. The interpolation is this case is the sum of soil moisture values for each HRU multiplied by the weight for that HRU/HUC12 combination as represented in the weights file. This kind of interpolation is common in hydrologic sciences and this demonstration can be used as a template for other interpolations.

Open source python tools

- gdptools: GeoDataProcessing Tools (GDPTools) is a python package for geospatial interpolation

- Geopandas: Geopandas is a python package for geospatial dataframes.

Data

- conda/mamba environment file ‘environment.yml’ https://www.sciencebase.gov/catalog/item/64cbcc52d34e2d58db2fa44b.

- Source Geometry: GFv1.1.gdb.zip available via sciencebase https://www.sciencebase.gov/catalog/item/5e29d1a0e4b0a79317cf7f63. https://doi.org/10.5066/P971JAGF

- Source Data: nhm_sm_subset.nc A 1-month subset of NHM ouput for the period 2020-01-01 - 2020-01-31. This dataset if for demonstration purposes only. It containts the output for Total soil-zone water storage. https://www.sciencebase.gov/catalog/item/64cbcc52d34e2d58db2fa44b

- Target Data: WBD_National_GDB.gdb available via sciencebase https://www.sciencebase.gov/catalog/item/63cb38b2d34e06fef14f40ad at the link https://prod-is-usgs-sb-prod-publish.s3.amazonaws.com/63cb38b2d34e06fef14f40ad/WBD_National_GDB.zip. https://doi.org/10.5066/P92U7ZUT

Description

In this use-case we interpolate the Total Soil Moisture output from the National Hydrologic Model (NHM) for a period of 1-month between 2020-01-01 and 2020-01-31 to CONUS twelve digit hydrologic unit code (HUC12). The following steps are performed. The Geometry data for both the NHM Hydrologic Response Units (HRUs) and the twelve HUC12 are read into a Geopandas Dataframe. The NHM ouput file is read into an XArray dataframe. GDPTools is used to create an area-weighted mapping between the source geometry (HRUs) and the target geometry (HUC12s). Then using the weights file the NHM results are interpolated to HUC12s. This demonstrates a common use-case for spatial interpolation from one geometry to another.

Imports

calc_weights = TrueDownload and read data

The source fabric (GFv1.1.gdb), target fabric (WBD_National_GDP), and the source data (nhm_sm_subset.nc) using GeoPandas for the fabrics and XArray for the data. Adjust the paths to the data as necessary.

# Source data

source_fabric = '~/data/GFv1.1.gdb'

source_data = '~/data/nhm_sm_subset.nc'

# Target data

target_fabric = '~/data/WBD_National_GDB/WBD_National_GDB.gdb'

# Read data

start = time.time()

source_gdf = gpd.read_file(source_fabric, layer='nhru_v1_1_simp')

source_fabric_time = time.time() - start

print(f"Source fabric read in: {source_fabric_time} seconds")

start = time.time()

source_ds = xr.open_dataset(source_data)

source_data_time = time.time() - start

print(f"Source data read in: {source_data_time} seconds")

start = time.time()

target_gdf = gpd.read_file(target_fabric, layer = 'WBDHU12')

target_fabric_time = time.time() - start

print(f"Target fabric read in: {target_fabric_time} seconds")Source fabric read in: 22.118669986724854 seconds

Source data read in: 3.0059561729431152 seconds

Target fabric read in: 121.06751918792725 secondsLook at the structure of each data source

source_gdf.head()| nhru_v1_1 | hru_segment_v1_1 | nhm_id | hru_id_nat | Version | Shape_Length | Shape_Area | Change | geometry | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 76127 | 40038 | 76128 | 76128 | 1 | 80441.423292 | 1.881188e+08 | -0.017302 | MULTIPOLYGON (((-105544.567 804074.976, -10541... |

| 1 | 76147 | 40038 | 76148 | 76148 | 1 | 53413.506290 | 4.418597e+07 | 0.054540 | MULTIPOLYGON (((-97185.217 806355.005, -97154.... |

| 2 | 76170 | 40021 | 76171 | 76171 | 1 | 54988.828358 | 7.338919e+07 | 0.018316 | MULTIPOLYGON (((-105894.643 815045.861, -10570... |

| 3 | 76172 | 40019 | 76173 | 76173 | 1 | 31915.213081 | 3.629082e+07 | 0.049861 | MULTIPOLYGON (((-106762.641 815925.020, -10675... |

| 4 | 76181 | 40019 | 76182 | 76182 | 1 | 27390.023934 | 1.567346e+07 | 0.154732 | MULTIPOLYGON (((-109785.311 816675.047, -10978... |

source_ds<xarray.Dataset> Size: 15MB

Dimensions: (nhru: 114958, time: 31)

Coordinates:

* nhru (nhru) int32 460kB 1 2 3 4 5 ... 114955 114956 114957 114958

* time (time) datetime64[ns] 248B 2020-01-01 ... 2020-01-31

Data variables:

nhm_id (nhru) int32 460kB ...

soil_moist_tot (time, nhru) float32 14MB ...

Attributes:

history: Tue Jan 17 19:24:30 2023: ncks -O -4 -L 2 --cnk_map=dmn --cnk_d...

NCO: netCDF Operators version 5.1.0 (Homepage = http://nco.sf.net, C...target_gdf.head()| TNMID | METASOURCEID | SOURCEDATADESC | SOURCEORIGINATOR | SOURCEFEATUREID | LOADDATE | GNIS_ID | AREAACRES | AREASQKM | STATES | ... | NAME | HUTYPE | HUMOD | TOHUC | NONCONTRIBUTINGAREAACRES | NONCONTRIBUTINGAREASQKM | GLOBALID | SHAPE_Length | SHAPE_Area | geometry | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | {B1EF0C55-72ED-4FF6-A3BA-97A87C6A6C47} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 12663.63 | 51.25 | AL | ... | Pond Creek | S | NM | 031401030102 | 0.0 | 0.0 | {8BA048A6-E29C-11E2-8094-0021280458E6} | 0.396873 | 0.004859 | MULTIPOLYGON (((-86.15784 31.42164, -86.15783 ... |

| 1 | {F0D9874D-52BA-4FDC-A5E6-E259B627764D} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 37030.62 | 149.86 | AL | ... | Lightwood Knot Creek | S | NM | 031401030103 | 0.0 | 0.0 | {8BA06091-E29C-11E2-8094-0021280458E6} | 0.845522 | 0.014214 | MULTIPOLYGON (((-86.18406 31.53503, -86.18406 ... |

| 2 | {2E0CB201-5672-45B5-8CA7-A60070122697} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 26011.73 | 105.27 | AL | ... | Poley Creek-Lightwood Knot Creek | S | NM | 031401030302 | 0.0 | 0.0 | {8BA07E87-E29C-11E2-8094-0021280458E6} | 0.663941 | 0.009979 | MULTIPOLYGON (((-86.29029 31.27059, -86.29089 ... |

| 3 | {9D39E120-C6DF-401F-AA8F-1748E9423AA0} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 25800.09 | 104.41 | AL | ... | Yellow River | S | NM | 031401030302 | 0.0 | 0.0 | {8BA0956B-E29C-11E2-8094-0021280458E6} | 0.640934 | 0.009897 | MULTIPOLYGON (((-86.30253 31.45077, -86.30251 ... |

| 4 | {9ED42D2B-5055-43DD-8096-09B30BB23E24} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 34025.86 | 137.70 | AL | ... | Bay Branch Creek | S | NM | 031401030203 | 0.0 | 0.0 | {8BA0B374-E29C-11E2-8094-0021280458E6} | 0.587969 | 0.013044 | MULTIPOLYGON (((-86.38404 31.37613, -86.38361 ... |

5 rows × 21 columns

Simply GeoDataFrame

Generally it’s a good practice to reduce the source geometry to include only the required columns, which are in this case are the feature id used to key the geometry and the geometry itself. Here, the identifiers are ‘nhru_v1_1’ and ‘geometry’ respectively. Simplifying in this way reduces the overall memory footprint when calculating the weights and interpolating the source data to the target fabric.

source_gdf_simp = source_gdf[['nhru_v1_1', 'geometry']]

source_gdf_simp.head()| nhru_v1_1 | geometry | |

|---|---|---|

| 0 | 76127 | MULTIPOLYGON (((-105544.567 804074.976, -10541... |

| 1 | 76147 | MULTIPOLYGON (((-97185.217 806355.005, -97154.... |

| 2 | 76170 | MULTIPOLYGON (((-105894.643 815045.861, -10570... |

| 3 | 76172 | MULTIPOLYGON (((-106762.641 815925.020, -10675... |

| 4 | 76181 | MULTIPOLYGON (((-109785.311 816675.047, -10978... |

Filter HUC12s to those that are within CONUS only and eliminate the Lake Michigan HUC12.

Here we filter HUC12s to represent the land-based HUC12s for CONUS only.

filter_water = ['Lake Michigan']

filter_state = ['MX', 'CN']

filter_huc12 = ['19', '20', '21', '22'] # Alaska, Hawaii, Puerto Rico, Pacific Islands

# Fileter non-conus HUC12s using filter_huc12

print(f"Number of huc12s before filtering: {len(target_gdf)}")

wbd_conus = target_gdf[~target_gdf['HUC12'].apply(lambda x: any(x.startswith(prefix) for prefix in filter_huc12))].copy()

print(f"Number of huc12s after filtering non-conus huc12s: {len(wbd_conus)}")

# Filter polygon representing Lake Michigan

wbd_filt = wbd_conus[~wbd_conus['NAME'].isin(filter_water)].copy()

print(f"Number of huc12s after filtering type: {len(wbd_filt)}")

# Filter polygons that are in Mexico and Canada

wbd_filt1 = wbd_filt[~wbd_filt['STATES'].isin(filter_state)].copy()

print(f"Number of huc12s after filtering states: {len(wbd_filt1)}")

# Filter partial polygons in Mexico and Canada

wbd_filt2 = wbd_filt1[~(wbd_filt1['STATES'].str.contains('CN'))].copy()

print(f"Number of huc12s after filtering Partial Canadian hucs: {len(wbd_filt2)}")

wbd_conus = wbd_filt2[~(wbd_filt2['STATES'].str.contains('MX'))].copy()

print(f"Number of huc12s after filtering Partial Mexican hucs: {len(wbd_conus)}")Number of huc12s before filtering: 101725

Number of huc12s after filtering non-conus huc12s: 86735

Number of huc12s after filtering type: 86734

Number of huc12s after filtering states: 83323

Number of huc12s after filtering Partial Canadian hucs: 82828

Number of huc12s after filtering Partial Mexican hucs: 82547wbd_conus.head()| TNMID | METASOURCEID | SOURCEDATADESC | SOURCEORIGINATOR | SOURCEFEATUREID | LOADDATE | GNIS_ID | AREAACRES | AREASQKM | STATES | ... | NAME | HUTYPE | HUMOD | TOHUC | NONCONTRIBUTINGAREAACRES | NONCONTRIBUTINGAREASQKM | GLOBALID | SHAPE_Length | SHAPE_Area | geometry | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | {B1EF0C55-72ED-4FF6-A3BA-97A87C6A6C47} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 12663.63 | 51.25 | AL | ... | Pond Creek | S | NM | 031401030102 | 0.0 | 0.0 | {8BA048A6-E29C-11E2-8094-0021280458E6} | 0.396873 | 0.004859 | MULTIPOLYGON (((-86.15784 31.42164, -86.15783 ... |

| 1 | {F0D9874D-52BA-4FDC-A5E6-E259B627764D} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 37030.62 | 149.86 | AL | ... | Lightwood Knot Creek | S | NM | 031401030103 | 0.0 | 0.0 | {8BA06091-E29C-11E2-8094-0021280458E6} | 0.845522 | 0.014214 | MULTIPOLYGON (((-86.18406 31.53503, -86.18406 ... |

| 2 | {2E0CB201-5672-45B5-8CA7-A60070122697} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 26011.73 | 105.27 | AL | ... | Poley Creek-Lightwood Knot Creek | S | NM | 031401030302 | 0.0 | 0.0 | {8BA07E87-E29C-11E2-8094-0021280458E6} | 0.663941 | 0.009979 | MULTIPOLYGON (((-86.29029 31.27059, -86.29089 ... |

| 3 | {9D39E120-C6DF-401F-AA8F-1748E9423AA0} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 25800.09 | 104.41 | AL | ... | Yellow River | S | NM | 031401030302 | 0.0 | 0.0 | {8BA0956B-E29C-11E2-8094-0021280458E6} | 0.640934 | 0.009897 | MULTIPOLYGON (((-86.30253 31.45077, -86.30251 ... |

| 4 | {9ED42D2B-5055-43DD-8096-09B30BB23E24} | None | None | None | None | 2013-01-18 07:07:56+00:00 | NaN | 34025.86 | 137.70 | AL | ... | Bay Branch Creek | S | NM | 031401030203 | 0.0 | 0.0 | {8BA0B374-E29C-11E2-8094-0021280458E6} | 0.587969 | 0.013044 | MULTIPOLYGON (((-86.38404 31.37613, -86.38361 ... |

5 rows × 21 columns

# Print columns. Note we use HUC12 as our feature id.

wbd_conus.columnsIndex(['TNMID', 'METASOURCEID', 'SOURCEDATADESC', 'SOURCEORIGINATOR',

'SOURCEFEATUREID', 'LOADDATE', 'GNIS_ID', 'AREAACRES', 'AREASQKM',

'STATES', 'HUC12', 'NAME', 'HUTYPE', 'HUMOD', 'TOHUC',

'NONCONTRIBUTINGAREAACRES', 'NONCONTRIBUTINGAREASQKM', 'GLOBALID',

'SHAPE_Length', 'SHAPE_Area', 'geometry'],

dtype='object')Simply target GeoDataFrame

As discussed with the source GeoDataFrame, here the target GeoDataFrame is simplified to the ‘HUC12’ feature id and ‘geometry’

wbd_conus_simp = wbd_conus[['HUC12', 'geometry']]

wbd_conus_simp.head()| HUC12 | geometry | |

|---|---|---|

| 0 | 031401030101 | MULTIPOLYGON (((-86.15784 31.42164, -86.15783 ... |

| 1 | 031401030102 | MULTIPOLYGON (((-86.18406 31.53503, -86.18406 ... |

| 2 | 031401030103 | MULTIPOLYGON (((-86.29029 31.27059, -86.29089 ... |

| 3 | 031401030104 | MULTIPOLYGON (((-86.30253 31.45077, -86.30251 ... |

| 4 | 031401030201 | MULTIPOLYGON (((-86.38404 31.37613, -86.38361 ... |

Generate weights for interpolating between the target and source polygons.

Here we use GDPTools ParallelWghtGenEngin class. Depending on the resources available one could also use the SerialWhgtGenEngin or DaskWghtGenEngine. This notebook was developed using a computer with 16 processors. A good rule of thumb is to use half the available processors.

from gdptools.weights.calc_weight_engines import ParallelWghtGenEngine

if calc_weights:

wght_gen = ParallelWghtGenEngine()

weights = wght_gen.calc_weights(

target_poly=wbd_conus_simp,

target_poly_idx="HUC12",

source_poly=source_gdf_simp,

source_poly_idx=["nhru_v1_1"],

source_type="poly",

wght_gen_crs=6931,

filename='nhm-to-huc12-weights.csv',

jobs=8

)

else:

weights = pd.read_csv('nhm-t0-huc12-weights.csv')Reprojecting to epsg:EPSG:6931 finished in 60.34 second(s)

Validating polygons

- validating source polygons

- fixing 9991 invalid polygons.

- validating target polygons

- fixing 1 invalid polygons.

Validate polygons finished in 13.9189 seconds

Weight gen finished in 167.9950 secondsAggregate NHM Total Soil Moisture results to HUC12s

Start by grouping the weights by HUC12s, as the interpolation between the source data and the HUC12s will be calculated by HUC12.

# group weights by HUC12 id

weights_huc12 = weights.groupby('HUC12', axis=0)

# Example of HUC12 weights for a given HUC12 id

weights_huc12.get_group('010100020101')| HUC12 | nhru_v1_1 | wght | |

|---|---|---|---|

| 472515 | 010100020101 | 250 | 0.000931 |

| 472516 | 010100020101 | 2455 | 0.000284 |

| 472517 | 010100020101 | 133 | 0.663843 |

| 472518 | 010100020101 | 137 | 0.304852 |

| 472519 | 010100020101 | 286 | 0.000695 |

| 472520 | 010100020101 | 230 | 0.029394 |

The HUC12 weights for the given HUC12 id shows that 6 HRUs intersect the HUC12 and the wght column indicates the fraction of the HUC12 area intersected by the hru id (nhru_v1_1)

Loop through HUC12s and apply weights to interpolate the NHM results

The source NHM data is interpolated to the target HUC12 polygons by iterating over the weights which have been grouped by the ‘HUC12’ and calculating the interpolated value as the sum of the hru valus*weights by using the dot product.

# The name of the NHM variable - see xarray.Dataset representation above.

key='soil_moist_tot'

# Create array to hold the interpolated data dimensioned by (time, HUC12)

agg_values = np.zeros((len(source_ds.time), len(wbd_conus)))

for index, group in enumerate(wbd_conus.HUC12.values):

if index % 10000 == 0:

print(index, group)

try:

weight_table = weights_huc12.get_group(group)

# weight values

w_vals = weight_table['wght'].values

hru_ids = weight_table['nhru_v1_1'].values.astype(int)

hru_vals = source_ds[key].sel(nhru=hru_ids).values

# Use dot product of the values and weights to calculate the interpolated

# value

agg_values[:, index] = hru_vals.dot(w_vals.T)

except KeyError as error: # This catches HUC12s that have no weights.

agg_values[:, index] = np.nan0 031401030101

10000 101900031206

20000 031300110502

30000 102901060405

40000 150100150401

50000 110902040502

60000 120800060602

70000 171003020707

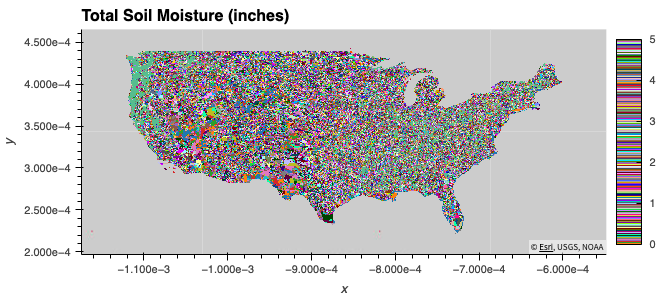

80000 080302090601Create a plot of Total Soil Moisture for the 2020-01-30

Here we use spatialpandas and hvplot. Because the number of polygons is large we get a performance boost using spatialpandas.

import spatialpandas as sp

import spatialpandas.geometry

import spatialpandas.dask

from spatialpandas import GeoDataFrame

wbd_conus['TotSoilMoist'] = agg_values[30,:]

sp_wbd = GeoDataFrame(wbd_conus)

sp_wbd.hvplot(c='TotSoilMoist', rasterize=True, colorbar=True, hover=True, hover_cols=['TotSoilMoist'], clim=(0,5), tiles=hv.element.tiles.EsriTerrain, title="Total Soil Moisture (inches)")

Summary

This use-case shows how to interpolate hydrologic modeling results of soil moisture from spatial units specific to model (hydrologic response units or HRUs) to other spatial units such as more standard hydrologic units (Watershed Boundary Dataset HUC12s in this case). The same process could be used for other polygon areas like counties. To interpolate values from one set of polygons to another, a table of intersection weights containing the fraction of each contributing HRU to overlapping HUC12s is calculated. The interpolation is then calculated as the sum of soil moisture values for each HRU multiplied by the weight for that HRU/HUC12 combination as contained in the weights file. This kind of interpolation is common in hydrologic sciences and this demonstration can be used as a template for other interpolations.