# Typical geospatial python imports

import geopandas as gpd

import spatialpandas as spd

import pandas as pd

import numpy as np

import xarray as xr

import pyproj

# HyRiver imports

from pynhd import NLDI

from pynhd import WaterData

# High-level plotting tools

import hvplot

import hvplot.xarray

import hvplot.pandas

# GDPTools imports

from gdptools import WeightGen

from gdptools import AggGen

from gdptools import ClimRCatData

import warnings

warnings.filterwarnings("ignore")

hvplot.extension('bokeh')Use Cases

gdptools-climater-catalog

Keywords: Terraclimate; HUC12; gdptools; HyRiver; spatial-interpolation

Domain: Climate; Hydrology

Language: Python

Description:

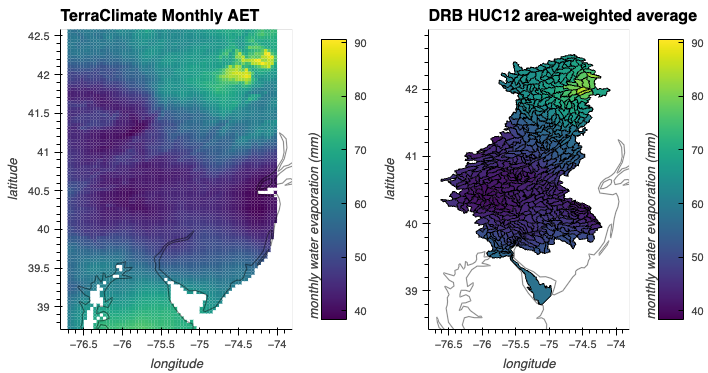

In this notebook we will spatially interpolate a set of variables including actual evapotranspiration (aet), potential evapotranspiration (pet), and Palmer Drought Severity Index (PDSI) from the Terraclimate dataset to a fabric of HUC12 in the Delaware River Basin. The bulk of the processing is done with the Geo Data Processing Tools (GDPTools) python package. A highlight of this use-case is introducing the ClimateR-Catalog of common climate datasets.

Introduction

In this notebook we will spatially interpolate a set of variables including actual evapotranspiration (aet), potential evapotranspiration (pet), and Palmer Drought Severity Index (PDSI) from the Terraclimate dataset to a fabric of HUC12 in the Delaware River Basin. The bulk of the processing is done with the Geo Data Processing Tools (GDPTools) python package. A highlight of this use-case is introducing the ClimateR-Catalog of common climate datasets.

We define the gridded climate data as our source data and the target data, is a set of hydrologic unit polygons representing HUC12s upstream of USGS Gage 01482100 (Delaware River at Del Mem Bridge at Wilmington De). The GDPTools package provides tools for interpolating the source data to the target data using area-weighted statistics. The source data requirements of GDPTools objects are initialized using the ClimateR-Catalog.

Spatial interpolation of climate data as demonstrated here is a common and often challenging geo data process performed while building hydrologic model applications. GDPTools was developed for this common processing need.

Data:

- No data requirements for this use-case.

Getting started

- Download environment-examples.yml

- Create a conda environment:

conda env create -f environment-examples.yml

conda activate gdptools-examplesImport packages

The imports represent a selection of typical packages for geospatial work in python including GeoPandas and XArray among others. We also use the pnhd package from the HyRiver suite of python packages, to create a target set of HUC12 polygons for our use-case. For generating plots of the source, target and the resulting spatial interpolation we use packages associated with hvPlot. The GDPTools packages are ClimRCatData for initializing the data sources, WeightGen for generating a table of weights which are used to generate the area-weighted interpolations using AggGen. These are all discussed in more detail below.

Data requirements for GDPTools.

The general data requirements for GDPTools are defined by the source data (gridded climate data) and target data (GeoDataFrame of polygons), and some additional parameters discussed below. We begin by identifying our source data here using the climater-catalog. The target data can be any set of polygon geometries read by GeoPandas. The three classes used by GDPTools in this tutorial are:

Data Classes:

ClimRCataData: Class to load source and target data

Process Classes:

Each of these classes accepts an instance of the ClimRCataData class.

WeightGen: Class to generate a table of weights. For every polygon in the target dataset this table identifies every grid cell that intersects that polygon and each cells weight, which is the fraction of the total polygon area, that each cell represents. These weights are used to calculate area weighted statistics, which is the method of interpolation or aggredation used in the following class.AggGen: Class to perform area-weighted statistics used to interpolate or aggregate the source data to the target data.

Define the source data using the climater-catalog

Note

The GDPTools documentation provids a table with common datasets in the climater-catlog. The table provides a link to any available description/documentation of the datasets as well as a search id. Another way to gain familiarity with the catalog is to open the json-version with a text editor and search for any keywords of interest.

- Import the climater-catalog from the web into pandas using its parquet version. Looking at the header of the catalog provides an initial view of what’s included in the catalog. Primarily the catalog provides a URL for each asset in the catalog that can be read by xarray to load that particular dataset.

climater_cat = "https://mikejohnson51.github.io/climateR-catalogs/catalog.parquet"

cat = pd.read_parquet(climater_cat)

cat.head()| id | asset | URL | type | varname | variable | description | units | model | ensemble | ... | Xn | Y1 | Yn | resX | resY | ncols | nrows | crs | toptobottom | tiled | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | maca_day | agg_macav2metdata_huss_BNU-ESM_r1i1p1_historic... | http://thredds.northwestknowledge.net:8080/thr... | opendap | specific_humidity | huss | Daily Mean Near-Surface Specific Humidity | kg kg-1 | BNU-ESM | r1i1p1 | ... | -67.064758 | 25.063078 | 49.396023 | 0.041666 | 0.041666 | 1386.0 | 585.0 | +proj=longlat +a=6378137 +f=0.0033528106647474... | True | T |

| 1 | maca_day | agg_macav2metdata_huss_CNRM-CM5_r1i1p1_histori... | http://thredds.northwestknowledge.net:8080/thr... | opendap | specific_humidity | huss | Daily Mean Near-Surface Specific Humidity | kg kg-1 | CNRM-CM5 | r1i1p1 | ... | -67.064758 | 25.063078 | 49.396023 | 0.041666 | 0.041666 | 1386.0 | 585.0 | +proj=longlat +a=6378137 +f=0.0033528106647474... | True | T |

| 2 | maca_day | agg_macav2metdata_huss_CSIRO-Mk3-6-0_r1i1p1_hi... | http://thredds.northwestknowledge.net:8080/thr... | opendap | specific_humidity | huss | Daily Mean Near-Surface Specific Humidity | kg kg-1 | CSIRO-Mk3-6-0 | r1i1p1 | ... | -67.064758 | 25.063078 | 49.396023 | 0.041666 | 0.041666 | 1386.0 | 585.0 | +proj=longlat +a=6378137 +f=0.0033528106647474... | True | T |

| 3 | maca_day | agg_macav2metdata_huss_bcc-csm1-1_r1i1p1_histo... | http://thredds.northwestknowledge.net:8080/thr... | opendap | specific_humidity | huss | Daily Mean Near-Surface Specific Humidity | kg kg-1 | bcc-csm1-1 | r1i1p1 | ... | -67.064758 | 25.063078 | 49.396023 | 0.041666 | 0.041666 | 1386.0 | 585.0 | +proj=longlat +a=6378137 +f=0.0033528106647474... | True | T |

| 4 | maca_day | agg_macav2metdata_huss_CanESM2_r1i1p1_historic... | http://thredds.northwestknowledge.net:8080/thr... | opendap | specific_humidity | huss | Daily Mean Near-Surface Specific Humidity | kg kg-1 | CanESM2 | r1i1p1 | ... | -67.064758 | 25.063078 | 49.396023 | 0.041666 | 0.041666 | 1386.0 | 585.0 | +proj=longlat +a=6378137 +f=0.0033528106647474... | True | T |

5 rows × 28 columns

- Here is a list of all the data columns in the catalog

cat.columnsIndex(['id', 'asset', 'URL', 'type', 'varname', 'variable', 'description',

'units', 'model', 'ensemble', 'scenario', 'T_name', 'duration',

'interval', 'nT', 'X_name', 'Y_name', 'X1', 'Xn', 'Y1', 'Yn', 'resX',

'resY', 'ncols', 'nrows', 'crs', 'toptobottom', 'tiled'],

dtype='object')- Define search terms and create catalog dictionary to use with

ClimRCatData. All the column headers can be searched using the pandas .query() method. Here we search for the “id”, “terraclim”, and several “variables” of that dataset, [“aet”, “pet”, “PDSI”]. The code then creates a dictionary with the variable names as keys and the resulting catalog data for each key as the values of the dictionary. This is then used by GDPTools objects.

_id = "terraclim"

_vars = ["aet", "pet", "PDSI"]

# create list of catalog parameters for each variable

cat_params = [

cat.query("id == @_id & variable == @_var").to_dict(orient="records")[0]

for _var in _vars

]

# create dictionary of variable names and catalog parameters

cat_dict = dict(zip(_vars, cat_params))Example key::value pair

cat_dict.get("aet"){'id': 'terraclim',

'asset': 'agg_terraclimate_aet_1958_CurrentYear_GLOBE',

'URL': 'http://thredds.northwestknowledge.net:8080/thredds/dodsC/agg_terraclimate_aet_1958_CurrentYear_GLOBE.nc',

'type': 'opendap',

'varname': 'aet',

'variable': 'aet',

'description': 'water_evaporation_amount',

'units': 'mm',

'model': None,

'ensemble': None,

'scenario': 'total',

'T_name': 'time',

'duration': '1958-01-01/2021-12-01',

'interval': '1 months',

'nT': 768.0,

'X_name': 'lon',

'Y_name': 'lat',

'X1': -179.97916666666666,

'Xn': 179.97916666666666,

'Y1': 89.97916666666667,

'Yn': -89.97916666666664,

'resX': 0.041666666666666664,

'resY': 0.041666666666666664,

'ncols': 8640.0,

'nrows': 4320.0,

'crs': '+proj=longlat +a=6378137 +f=0.00335281066474748 +pm=0 +no_defs',

'toptobottom': False,

'tiled': ''}Define the target data

In this cell we use clients to several https://api.water.usgs.gov tools:

NLDI

- Tool: USGS NLDI

- Client: pynhd.NLDI

GeoServer

- Tool: USGS GeoServer

- Client: pynhd.WaterData

More examples of using the HyRiver pynhd package can be found here

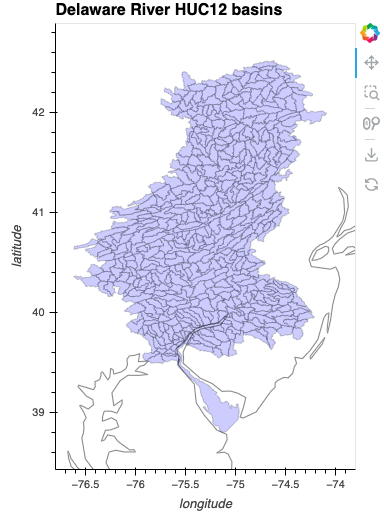

Here NLDI is used to define the upstream basin from a USGS gage on the Delaware River. The Geometry of that basin is then used to retrieve all the encapsulated HUC12s.

# USGS gage 01482100 Delaware River at Del Mem Bridge at Wilmington De

gage_id = "01482100"

nldi = NLDI()

del_basins = nldi.get_basins(gage_id)

huc12_basins = WaterData("wbd12").bygeom(del_basins.geometry[0])

spd_huc12_basins = spd.GeoDataFrame(huc12_basins)- Visualize the extracted HUC12s in the Delaware River Basin

drb = huc12_basins.hvplot(

geo=True, coastline='50m', alpha=0.2, c='b', frame_width=300,

xlabel="longitude", ylabel="latitude", clabel="monthly water evaporation (mm)", title="Delaware River HUC12 basins", xlim=(-76.8, -73.8), aspect='equal',

)

drb

Generate a weights file

ClimRCatData is initialized with the source and target data:

Source Data:

- cat_dict=cat_dict: A dictionary with variable names as keys and climater-catalog data as the values.

- period=[“1980-01-01”, “1980-12-31”]: In this case the WeightGen process class will simply use the first time in the data to define the data. However, in the next code cell, the data are aggregated over the period define here.

Target Data: - f_feature=huc12_basins: The GeoDataFrame of HUC12 basins we defined above - id_feature=‘huc12’: The column identifier used to identify both the target polygons in the weights file, and the interpolated results in the AggGen process class.

WeightGen is initialized as:

- user_data=user_data: The

ClimRCatDatainstance we defined above. - method=‘serial’: One of three methods including: “serial”, “parallel”, or “dask”. When working with very large source and target data, using parallel or dask methods can improve the speed-up of the calculation of weights.

- output_file=“terraclime_wghts.csv”: Set’s the name of the resulting file of weights.

- weight_gen_crs=6931: This is the common, equal area projection that is used to reproject both the source and target data, into an equal area projection for calculating the intersection of the polygons representing the cells of the gridded data with the source HUC12 polygons. 6931 Is a choice we commonly use for the Norther hemisphere.

calculate_weights() method calculates the weights, saveing the terraclime_wghts.csv file and also returning a pandas dataframe of the weights.

user_data = ClimRCatData(

cat_dict=cat_dict,

f_feature=huc12_basins,

id_feature='huc12',

period=["1980-01-01", "1980-12-31"]

)

wght_gen = WeightGen(

user_data=user_data,

method="serial",

output_file="terraclime_wghts.csv",

weight_gen_crs=6931

)

wghts = wght_gen.calculate_weights()Using serial engine

grid cells generated in 0.4124 seconds

Data preparation finished in 1.2177 seconds

Reprojecting to epsg:EPSG:6931 finished in 0.05 second(s)

Validating polygons

- validating source polygons

- fixing 0 invalid polygons.

- validating target polygons

- fixing 0 invalid polygons.

Validate polygons finished in 0.0290 seconds

Intersections finished in 0.4980 seconds

Weight gen finished in 0.5279 secondsView the resulting weights file

wghts_df = pd.read_csv('terraclime_wghts.csv')

wghts_df.head()| huc12 | i | j | wght | |

|---|---|---|---|---|

| 0 | 20402050501 | 70 | 24 | 0.027234 |

| 1 | 20402050501 | 71 | 24 | 0.118993 |

| 2 | 20402050501 | 72 | 23 | 0.025754 |

| 3 | 20402050501 | 71 | 23 | 0.393885 |

| 4 | 20402050501 | 71 | 22 | 0.230850 |

- Inspect the values for a given huc12 id. Note that summing the weights = 1. The weights identify the grid cell by it’s i,j index and the value of the weights is the fraction of the total area of the huc12 of the intersected grid cell.

wghts_group = wghts_df.groupby('huc12')

wghts_group.get_group(20401030203)| huc12 | i | j | wght | |

|---|---|---|---|---|

| 3807 | 20401030203 | 24 | 34 | 0.010206 |

| 3808 | 20401030203 | 21 | 36 | 0.023844 |

| 3809 | 20401030203 | 22 | 35 | 0.023939 |

| 3810 | 20401030203 | 21 | 35 | 0.209007 |

| 3811 | 20401030203 | 20 | 35 | 0.066674 |

| 3812 | 20401030203 | 23 | 34 | 0.195367 |

| 3813 | 20401030203 | 22 | 34 | 0.243702 |

| 3814 | 20401030203 | 21 | 34 | 0.149858 |

| 3815 | 20401030203 | 20 | 34 | 0.011366 |

| 3816 | 20401030203 | 23 | 33 | 0.021598 |

| 3817 | 20401030203 | 22 | 33 | 0.044440 |

Run an area-weighted interpolation of the mean values

Note

More information of the

AggGenclass can be found here.

Once the weights are calculated, we use the terraclime_daily_wghts.csv file generated by the calculate_weights() method as input to the AggGen process class. AggGen process class takes the following input:

- user_data = user_data: The same dataclass define above.

- stat_method = “masked_mean”: see documentation for other statistics available.

- agg_engine = “serial”: There are serial, parallel, and dask engines available

- agg_writer = “netcdf”: There are csv, parquet, and json writers also available.

- weights = “terraclime_wghts.csv”: Weights file generated above.

- outpath = “.”: Output will be written to this directory

- file_prefix = “testing_tc_drb_huc12”: In this case the resulting file will be testing_tc_drb_huc12.nc - a netcdf file.

agg_gen = AggGen(

user_data=user_data,

stat_method="masked_mean",

agg_engine="serial",

agg_writer="netcdf",

weights= "terraclime_wghts.csv",

out_path=".",

file_prefix="testing_tc_drb_huc12"

)

ngdf, ds_out = agg_gen.calculate_agg()Processing: aet

Data prepped for aggregation in 0.7537 seconds

Data aggregated in 0.8322 seconds

Processing: pet

Data prepped for aggregation in 1.1055 seconds

Data aggregated in 0.9126 seconds

Processing: PDSI

Data prepped for aggregation in 0.8593 seconds

Data aggregated in 0.8631 seconds

Saving netcdf file to testing_tc_drb_huc12.ncVisualize the results for the variable “aet”

- Return the DRB HUC12 bounded subset of the TerraClimate variable “aet”.

processed_data = agg_gen.agg_data

da = processed_data.get("aet").da

da<xarray.DataArray 'aet' (time: 12, lat: 93, lon: 65)> Size: 290kB

dask.array<getitem, shape=(12, 93, 65), dtype=float32, chunksize=(12, 93, 65), chunktype=numpy.ndarray>

Coordinates:

* lon (lon) float64 520B -76.69 -76.65 -76.6 ... -74.1 -74.06 -74.02

* lat (lat) float64 744B 42.56 42.52 42.48 42.44 ... 38.81 38.77 38.73

* time (time) datetime64[ns] 96B 1980-01-01 1980-02-01 ... 1980-12-01

Attributes:

units: mm

description: Actual Evapotranspiration

long_name: water_evaporation_amount

standard_name: water_evaporation_amount

dimensions: lon lat time

grid_mapping: crs

coordinate_system: WGS84,EPSG:4326

_ChunkSizes: [ 1 720 1440]- Plot the resulting interpolation of the mean values for the variable “aet” in the DRB HUC12s

spd_ngdf = spd.GeoDataFrame(ngdf.to_crs(4326))

var = 'aet'

time_step = "1980-08-01"

tmin = np.nanmin(da.sel(time=time_step).values)

tmax = np.nanmax(da.sel(time=time_step).values)

print(tmin, tmax)

spd_ngdf['aet'] = ds_out[var].sel(time=time_step)

terraclim_agg = spd_ngdf.hvplot(

c='aet', geo=True, coastline='50m', alpha=1, cmap='viridis', clim=(tmin, tmax),

xlabel="longitude", ylabel="latitude", clabel="monthly water evaporation (mm)", xlim=(-76.8, -73.8),

title="DRB HUC12 area-weighted average", aspect='equal'

)

terraclim_raw = da.sel(time=time_step).hvplot.quadmesh(

'lon', 'lat', 'aet', cmap='viridis', alpha=1, grid=True, geo=True, coastline='50m', clim=(tmin, tmax),

xlabel="longitude", ylabel="latitude", clabel="monthly water evaporation (mm)", xlim=(-76.8, -73.8),

title="TerraClimate Monthly AET", aspect='equal',

)

(terraclim_raw + terraclim_agg)